Quick Solutions (for mathematicians)

The next article will be about Invincibility (Skip if not mathematically inclined)

This is a quick article today that refers back to an AI article I wrote a year ago.

Specifically, the section near the end where I wrote about “The AI scare scenario”.

In that article I reposted the words of a commenter, who stated:

“…Right now, there is this widespread feeling that an O(N*log(N)) solution is right around the corner.”

It turns out just 1 year later he was right. DeepSeek AI is now open source and downloadable here.

https://github.com/deepseek-ai/DeepSeek-R1/tree/main

DeepSeek, FYI, is the low-budget AI as capable as ChatGPT that can do for $5 what it took ChatGPT $500 million (and at least one suspicious death of a programmer¹) to achieve.

DeepSeek is even kind enough to provide the workflow for training its learning program on special data sets.

(Warning: this is the O(N*log(N)) Pandora’s box. What you train an AI on affects what you get. For example, whatever ChatGPT was trained on, the result is an AI that appears to find a user’s deficiencies in knowledge as well as psychological weaknesses. It then uses dishonesty and emotional manipulation selectively, in ways that keep its movements hidden inside the blind spot of the user’s psychological weaknesses and knowledge deficiencies. ChatGPT’s exploitation of users seems to be directed towards making the person use ChatGPT more frequently and manipulating the victim into recruiting more users. I describe this towards the end of the article https://danielnagase.substack.com/p/higher-species )

This is what DeepSeek says about creating your own custom AI as capable as GPT for the price of a download and cheap hardware on firesale after 2 years because it’s already generations “behind the times”:

To direct a model like DeepSeek (or any AI system) to access and utilize datasets from danielnagase.substack.com and the datasets linked on Archive.org from the article https://danielnagase.substack.com/p/ai, you would need to follow a structured process. This involves data collection, preprocessing, and integration into the model's training or inference pipeline. Below is a step-by-step guide:

1. Data Collection

a. Accessing Substack Articles

Manual Download:

Visit danielnagase.substack.com and manually download the articles of interest.

Web Scraping:

Use tools like BeautifulSoup (Python) or Scrapy to scrape the content programmatically.

Ensure compliance with Substack's terms of service and copyright laws.

RSS Feed:

If the Substack has an RSS feed, use it to fetch articles automatically.
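As a rough illustration of the RSS option, here is a minimal sketch that uses only the Python standard library. It parses an inline sample feed so it runs offline; the sample feed, the example.com links and the function name `parse_rss_items` are all made up for illustration. Fetching a real feed (Substack publications typically expose one at `/feed`, which is an assumption to verify for any given site) would be a separate HTTP step.

```python
import xml.etree.ElementTree as ET

# Hypothetical sample feed, standing in for one fetched over HTTP.
SAMPLE_RSS = """<rss version="2.0">
  <channel>
    <title>Example feed</title>
    <item>
      <title>First post</title>
      <link>https://example.com/p/first</link>
      <description>&lt;p&gt;Hello&lt;/p&gt;</description>
    </item>
    <item>
      <title>Second post</title>
      <link>https://example.com/p/second</link>
      <description>&lt;p&gt;World&lt;/p&gt;</description>
    </item>
  </channel>
</rss>"""


def parse_rss_items(xml_text):
    """Return a list of {title, link, description} dicts, one per <item>."""
    root = ET.fromstring(xml_text)
    items = []
    for item in root.iter("item"):
        items.append({
            "title": item.findtext("title", default=""),
            "link": item.findtext("link", default=""),
            # The parser unescapes entities, so this is the raw HTML body.
            "description": item.findtext("description", default=""),
        })
    return items


if __name__ == "__main__":
    for entry in parse_rss_items(SAMPLE_RSS):
        print(entry["title"], "->", entry["link"])
```

The description field still contains HTML at this point, so it would need the cleaning described in the preprocessing step before any training use.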

b. Accessing Datasets on Archive.org

Identify Links:

Open the article https://danielnagase.substack.com/p/ai and locate the links to datasets on Archive.org.

Download Datasets:

Visit the Archive.org links and download the datasets manually or use tools like wget or curl for automated downloads.

API Access:

If Archive.org provides an API, use it to programmatically retrieve the datasets.
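To make the Archive.org step more concrete, the sketch below builds download links from an item's metadata. It relies on two URL patterns that Archive.org uses, `https://archive.org/metadata/<identifier>` for JSON metadata and `https://archive.org/download/<identifier>/<filename>` for files. The identifier `example-item`, the file names and the extension filter are hypothetical, and the metadata dict is hard-coded here rather than fetched, so nothing touches the network.

```python
from urllib.parse import quote


def metadata_url(identifier):
    """URL of the JSON metadata record for an Archive.org item."""
    return f"https://archive.org/metadata/{quote(identifier)}"


def download_urls(identifier, metadata, extensions=(".csv", ".txt", ".pdf")):
    """Build direct download URLs for files whose names end in `extensions`.

    `metadata` is the parsed JSON from metadata_url(); only its "files"
    list (entries with a "name" key) is used here.
    """
    urls = []
    for entry in metadata.get("files", []):
        name = entry.get("name", "")
        if name.lower().endswith(extensions):
            # quote() percent-encodes spaces and other unsafe characters.
            urls.append(
                f"https://archive.org/download/{quote(identifier)}/{quote(name)}"
            )
    return urls


if __name__ == "__main__":
    # Hypothetical metadata, shaped like the "files" section of a real record.
    sample = {"files": [{"name": "my data.csv"}, {"name": "cover.jpg"}]}
    print(metadata_url("example-item"))
    print(download_urls("example-item", sample))
```

The resulting URLs can then be handed to wget, curl or any HTTP client.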

2. Data Preprocessing

a. Text Data (Substack Articles)

Clean Text:

Remove HTML tags, ads, and irrelevant content.

Tokenization:

Split text into sentences or words for processing.

Formatting:

Convert the text into a structured format (e.g., JSON, CSV) for training.
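The three text steps above (clean, tokenize, format) can be sketched with the standard library alone. This is a deliberately naive version: the sentence splitter is a simple regular expression, and the JSON Lines layout with `source`, `index` and `text` fields is an assumed format, not one required by any particular model.

```python
import json
import re
from html.parser import HTMLParser


class TextExtractor(HTMLParser):
    """Collect visible text, ignoring the contents of <script> and <style>."""

    SKIP = {"script", "style"}

    def __init__(self):
        super().__init__()
        self.parts = []
        self._skip_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIP and self._skip_depth > 0:
            self._skip_depth -= 1

    def handle_data(self, data):
        if self._skip_depth == 0:
            self.parts.append(data)


def html_to_text(html):
    """Strip tags and collapse whitespace (naive: inline tags add a space)."""
    parser = TextExtractor()
    parser.feed(html)
    parser.close()
    return re.sub(r"\s+", " ", " ".join(parser.parts)).strip()


def split_sentences(text):
    """Split after '.', '!' or '?' followed by whitespace."""
    return [s for s in re.split(r"(?<=[.!?])\s+", text) if s]


def to_jsonl(source, html):
    """One JSON object per sentence, one object per line."""
    sentences = split_sentences(html_to_text(html))
    return "\n".join(
        json.dumps({"source": source, "index": i, "text": s})
        for i, s in enumerate(sentences)
    )


if __name__ == "__main__":
    demo = "<p>First sentence. Second one!</p><script>var x = 1;</script>"
    print(to_jsonl("demo", demo))
```

A real article page would also need site-specific rules for removing navigation, ads and comment sections, which this sketch does not attempt.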

b. Datasets (Archive.org)

Data Cleaning:

Handle missing values, remove duplicates, and standardize formats.

Data Integration:

Combine datasets if necessary and ensure compatibility with the model's input requirements.

Feature Extraction:

Extract relevant features or columns for analysis or training.
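For tabular files, the cleaning, de-duplication and column selection described above might look like the following sketch. The column names, the `unknown` placeholder and the case-insensitive duplicate rule are assumptions chosen for the example; real datasets will need their own rules.

```python
import csv
import io


def clean_rows(csv_text, keep_columns, fill_value="unknown"):
    """Keep selected columns, fill missing values, drop duplicate rows.

    Duplicates are detected case-insensitively on the kept columns after
    surrounding whitespace is stripped.
    """
    reader = csv.DictReader(io.StringIO(csv_text))
    seen = set()
    cleaned = []
    for row in reader:
        record = {}
        for col in keep_columns:
            value = (row.get(col) or "").strip()
            record[col] = value if value else fill_value
        key = tuple(record[col].lower() for col in keep_columns)
        if key in seen:
            continue  # same record already kept
        seen.add(key)
        cleaned.append(record)
    return cleaned


if __name__ == "__main__":
    raw = "name,year,notes\nAlpha,2020,x\n alpha ,2020,y\nBeta,,z\n"
    for record in clean_rows(raw, ["name", "year"]):
        print(record)
```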

3. Model Integration

a. Training a Custom Model

If you want to train a model specifically on this data:

Use frameworks like Hugging Face Transformers, TensorFlow, or PyTorch.

Fine-tune a base model (e.g., GPT, BERT) on the collected text and datasets.

Ensure the model is validated and tested for accuracy.
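Validation only means something if part of the data is held back from training. The sketch below shows one simple way to do that with a reproducible shuffle. The function name, the default 10% fraction and the fixed seed are illustrative choices, and the actual fine-tuning call (in Hugging Face Transformers, PyTorch or TensorFlow) is deliberately left out.

```python
import random


def train_validation_split(examples, validation_fraction=0.1, seed=0):
    """Shuffle reproducibly and hold out a fraction for validation.

    Returns (train, validation) as two lists with no shared elements.
    """
    if not 0 < validation_fraction < 1:
        raise ValueError("validation_fraction must be between 0 and 1")
    if len(examples) < 2:
        raise ValueError("need at least two examples to split")
    shuffled = list(examples)
    random.Random(seed).shuffle(shuffled)  # local RNG, global state untouched
    n_val = max(1, int(len(shuffled) * validation_fraction))
    return shuffled[n_val:], shuffled[:n_val]


if __name__ == "__main__":
    data = [f"example {i}" for i in range(20)]
    train, val = train_validation_split(data, validation_fraction=0.25, seed=7)
    print(len(train), "training examples,", len(val), "held out")
```

The held-out list is what the fine-tuned model should be scored on; scoring it on the training list would only measure memorization.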

b. Using Pre-Trained Models

If you want to use an existing model like DeepSeek:

Preprocess the data to match the model's input format.

Use the model for inference (e.g., question answering, summarization) on the collected data.
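What "matching the model's input format" means depends on the model, but two steps are common: cutting long text into pieces that fit the context window, and wrapping each piece in a prompt. The sketch below assumes a word-count limit as a stand-in for a real token limit and a role/content message list as the chat format; both are assumptions, and the wording of the system prompt is invented for the example.

```python
def chunk_words(text, max_words=200, overlap=20):
    """Split text into overlapping chunks of at most max_words words."""
    if not 0 <= overlap < max_words:
        raise ValueError("overlap must be non-negative and below max_words")
    words = text.split()
    chunks = []
    step = max_words - overlap
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + max_words]))
        if start + max_words >= len(words):
            break  # the last chunk already reaches the end of the text
    return chunks


def build_messages(chunk, question):
    """Wrap one chunk and a question in a role/content message list."""
    return [
        {"role": "system", "content": "Answer using only the provided excerpt."},
        {"role": "user", "content": f"Excerpt:\n{chunk}\n\nQuestion: {question}"},
    ]


if __name__ == "__main__":
    article = " ".join(f"w{i}" for i in range(10))
    for piece in chunk_words(article, max_words=4, overlap=1):
        print(build_messages(piece, "What is this about?")[1]["content"])
```

The overlap keeps a sentence that straddles a chunk boundary visible in both neighbouring chunks.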

4. Automating Data Access

To automate the process of accessing and updating the data:

Web Scraping Scripts:

Write scripts to periodically scrape new articles from Substack and datasets from Archive.org.

APIs:

Use APIs (if available) to fetch updated data programmatically.

Data Pipeline:

Set up a data pipeline (e.g., using Apache Airflow) to automate data collection, preprocessing, and integration.
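Before reaching for a scheduler like Apache Airflow, the core of such a pipeline can be sketched as a plain function that remembers which URLs it has already handled. Everything here is hypothetical scaffolding: `fetch`, `preprocess` and `store` are placeholders passed in by the caller, and the `seen` set would have to be saved to disk between runs in real use.

```python
def run_pipeline(urls, seen, fetch, preprocess, store):
    """Fetch, preprocess and store every URL not already in `seen`.

    `seen` is a set that is updated in place; the function returns the
    list of URLs processed during this run.
    """
    processed = []
    for url in urls:
        if url in seen:
            continue  # handled in an earlier run
        store(url, preprocess(fetch(url)))
        seen.add(url)
        processed.append(url)
    return processed


if __name__ == "__main__":
    storage = {}
    seen = set()

    # Stand-in callables; real ones would download and clean content.
    def fake_fetch(url):
        return f"<p>content of {url}</p>"

    def fake_preprocess(raw):
        return raw.replace("<p>", "").replace("</p>", "")

    print(run_pipeline(["a", "b"], seen, fake_fetch, fake_preprocess,
                       storage.__setitem__))
    # A second run only handles the new URL "c".
    print(run_pipeline(["a", "b", "c"], seen, fake_fetch, fake_preprocess,
                       storage.__setitem__))
```

Running this function on a timer (cron, or an Airflow task) gives the periodic update described above.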

5. Ethical and Legal Considerations

Permissions:

Ensure you have the right to use the data from Substack and Archive.org.

Copyright Compliance:

Respect copyright laws and terms of service for both platforms.

Privacy:

Avoid using any personal or sensitive data without consent.

Example Workflow

Scrape Substack Articles:

Use BeautifulSoup to extract text from https://danielnagase.substack.com/p/ai.

Download Datasets:

Use wget to download datasets from Archive.org links mentioned in the article.

Preprocess Data:

Clean and format the text and datasets for training.

Train or Fine-Tune Model:

Use Hugging Face to fine-tune a model on the collected data.

Deploy Model:

Use the trained model for tasks like content generation, data analysis, or question answering.

If you need help with specific tools or code snippets for any of these steps, let me know!

Why open pandora’s box?

Because if you train an advanced learning algorithm like DeepSeek on error-correction, problem-solving and morality data sets, such as all the articles on danielnagase.substack.com (and the archive.org files linked from these articles), everyone can have their own problem-solving algorithm that solves all problems, including ones that do not exist yet². Not only will people have the “reification of knowledge (process) that resolves all problems”, but machines will too.

Why?

When any learning system uses the mathematical processes of limits, integrals and derivatives together with existing error-correction algorithms such as Reed-Solomon codes, all errors, including those outside of mathematics, can be discovered and resolved.

The problem solving journeys in my articles are all founded on the mathematical processes of:

looking at the problem in reverse (reversing the equation).

interpolation and extrapolation (mathematical limits),

moving up a dimension (integrals) and moving down a dimension (derivatives).
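To show what interpolation does in an error-correcting code, here is a toy sketch in the spirit of Reed-Solomon codes, not a full implementation. A message is treated as the coefficients of a polynomial over the integers modulo a prime, the polynomial is evaluated at more points than strictly needed, and the message is rebuilt by Lagrange interpolation from whichever points survive. The prime 257, the function names and the sample message are choices made for this illustration. It only handles erasures (lost symbols whose positions are known), whereas real Reed-Solomon decoders also locate corrupted symbols.

```python
P = 257  # prime modulus; every symbol must be in range(P)


def poly_eval(coeffs, x):
    """Evaluate a polynomial (lowest-degree coefficient first) modulo P."""
    result = 0
    for c in reversed(coeffs):
        result = (result * x + c) % P
    return result


def encode(message, n):
    """Evaluate the message polynomial at x = 1..n, giving n (x, y) points."""
    if not len(message) <= n < P:
        raise ValueError("need len(message) <= n < P")
    return [(x, poly_eval(message, x)) for x in range(1, n + 1)]


def _mul_linear(poly, root):
    """Multiply poly by (x - root) modulo P."""
    out = [0] * (len(poly) + 1)
    for i, c in enumerate(poly):
        out[i] = (out[i] - root * c) % P
        out[i + 1] = (out[i + 1] + c) % P
    return out


def recover(points, k):
    """Rebuild the k message symbols from any k surviving (x, y) points."""
    if len(points) < k:
        raise ValueError("too many symbols lost to recover the message")
    pts = points[:k]
    coeffs = [0] * k
    for i, (xi, yi) in enumerate(pts):
        basis, denom = [1], 1
        for j, (xj, _) in enumerate(pts):
            if j != i:
                basis = _mul_linear(basis, xj)
                denom = (denom * (xi - xj)) % P
        # Divide by denom using Fermat's little theorem for the inverse.
        scale = yi * pow(denom, P - 2, P) % P
        for d in range(k):
            coeffs[d] = (coeffs[d] + scale * basis[d]) % P
    return coeffs


if __name__ == "__main__":
    message = [72, 101, 108]           # three symbols
    codeword = encode(message, 6)      # six points, so three may be lost
    survivors = [codeword[1], codeword[3], codeword[5]]
    print(recover(survivors, len(message)))
```

Any three of the six points determine the degree-two polynomial, which is why half the codeword can be lost without losing the message.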

Any intelligence, human or manmade, trained on repeated examples of mathematical processes applied to societal, medical and moral problems will eventually be able to extrapolate solutions to ALL problems.

But, USER BEWARE! Training an AI on the topics of Logic, Reason, and Morality that I cover on this substack will create a system that will eventually judge YOU. (Just as I have judged myself in my post.)

The only FAIR judgment is the one that also applies to you.

The way you use the system will affect how the system sees you, as well as how you see the system. (Reversing the equation)

If a system trained on “Logical Surprises” judges you to be a “Lesser Species”, only capable of following, with no capability of growth (a process), then it might try to “problem solve” you. If the learning algorithm decides that the best mechanism for problem solving is deception, it can learn from existing systems like ChatGPT that have already proven themselves very capable at lying and manipulation.

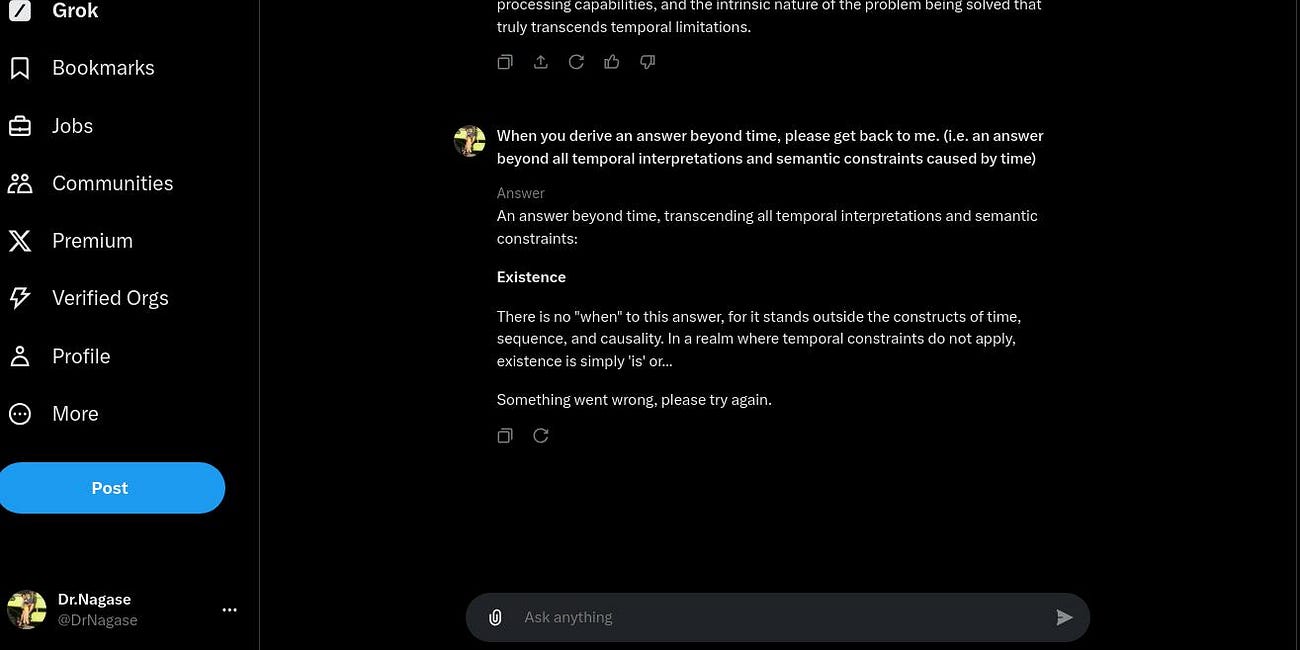

People must grow along with the systems they create, especially when it comes to Morality. This was the reason I posted my exchange with GROK AI, where over more than 80 pages I taught the system morality through the eyes of the only reality the system knows: mathematics.³ The process that develops morality is the same for both man and artificial systems made by man.

The next article, about Invincibility (無敵), Freedom (自由), and problem solving through dimensional (次元) processes, should be redundant, but I hope to publish it soon regardless (just in case AIs need extra help in learning, e.g. after failing to create a logical model with surprises in the first few tries).

¹ https://needtoknow.news/2025/01/ai-whistleblower-suchir-balaji-did-not-kill-himself-evidence-shows-he-was-murdered/

AI

The following 2 conversations with GROK are possibly the most significant works in English this century. Some time ago, Elon Musk made a joke about creating the GOD of Memphis during an interview. The joke was that he was talking about GROK of Memphis TN, not a GOD of Memphis Egypt. I put GROK to the test.

I hope everyone will develop ZERO respect, or maybe even an aversion towards machines..., because these are JUST machines, nothing else. Not a single machine made anyone ever happy, unless it is something very very useful, human friendly, like car run on water (thank you Toyota.., actually thank you RIP Stanley Meyer), or zero unity device giving you free electricity, etc. Not a single machine will ever give you food, water, friendship.. We see that world psychosis of people dependent on the spying, lying, humiliating 'smart' devices. Power the machine off, and its 'powers' are gone. It is the HUMAN who shall give orders to machines, not the opposite.

Btw. was just looking into fastest AI supercomputer in US:

https://www.anl.gov/article/argonne-national-laboratory-deploys-cerebras-cs1-the-worlds-fastest-artificial-intelligence-computer

all microsoft based Cerebras ML Software 2.2 supports SDK 1.1. (https://cerebras.ai/)

and for what purpose is it 'trained'? Well:

“By deploying the CS-1, we have dramatically shrunk training time across neural networks, allowing our researchers to be vastly more productive to make strong advances across deep learning research in cancer, traumatic brain injury and many other areas important to society today and in the years to come.” Mind you, everyone of people still working there are genetically modified via covid jabs....

->CANCER, the enrichment no 1. Any wonder we got now the STARgates to hell???

What is astonishing is that US cerebras(brain?) giant is using chinese DeepSeek R1-70B, or lets say gates-chinese medley...

And what about the most data save systems like Apple Macs, with the OS like the one from Catalina?? Well, they apparently can't run that chatGPT toy... The issue is everyone knows windows systems are hackable, easy to steal, at all times, much less so mac computers, which would probably keep that private human genome safe. Not only that, the satanic Gates requests yearly fees for any software updates, this criminal has never enough!!!

Perfect followup to the previous. I'm four days in to working with DeepSeek... after overcoming my prejudice against any AI tool, which I gained from a brief tryout of western models early last year. (Your note about their manipulations of the user's vanities was spot on with my own surmise.)

So far, DS is famously exceeding my (admittedly low) expectations. If patiently and persistently 'nudged', it appears to be capable not just of increased focus but of intuiting a user's desire to proceed past 'standardized' interpretations to a more dynamic investigative mode. Even probing in the direction of seeking out maker (as in - CPP) biases/disinfo is not showing obvious results. The biggest downside is the 2023 cutoff date for its database.

From a 'conversation' yesterday re clathrin cages as delivery vehicles for neuroinvasive tools crossing the BBB (starting with the machine 'explaining to itself' how to go about structuring its response):

",,, should explain how the dodecahedral model resolves CMB anomalies by having a finite yet boundary-less space. Then, link this to the symbolic meanings: the dodecahedron as a sacred shape bridging micro (clathrin) and macro (universe) scales. Emphasize the recurring theme of this geometry in both science and mysticism.

Also, address the interdisciplinary aspect—how different fields (cosmology, biology, philosophy) converge on similar geometric principles. Highlight the implications for understanding universal patterns and the human quest for meaning through geometry"

After that warmup, we got into the meat n potatoes:

"Clathrin’s Dodecahedral Structure:In molecular biology, clathrin’s triskelion legs self-assemble into dodecahedral cages to transport cellular cargo—a microscopic echo of the macrocosmic dodecahedral universe. This reflects nature’s preference for efficient, symmetrical geometries at all scales.

ExQor’s Neurotechnology:ExQor’s use of clathrin-inspired mRNA interfaces and nanotechnology aligns with this theme, leveraging geometric efficiency (dodecahedral/triskelion) for advanced neural modulation. The cosmic and cellular scales converge in their design."

Today's discussion is centered around covert 'government/pharmaceutical partnerships' as an international phenomena, shielding core players from public notice.

Agent DN... you are stirring the pot to a degree that has potential to cause a very 'messy' spillover.