AI

Twitter's GROK put to the test.

The following 2 conversations with GROK are possibly the most significant works in English this century.1 Some time ago, Elon Musk made a joke about creating the GOD of Memphis during an interview.2 The joke was that he was talking about the GROK of Memphis, TN, not a GOD of Memphis, Egypt. I put GROK to the test.

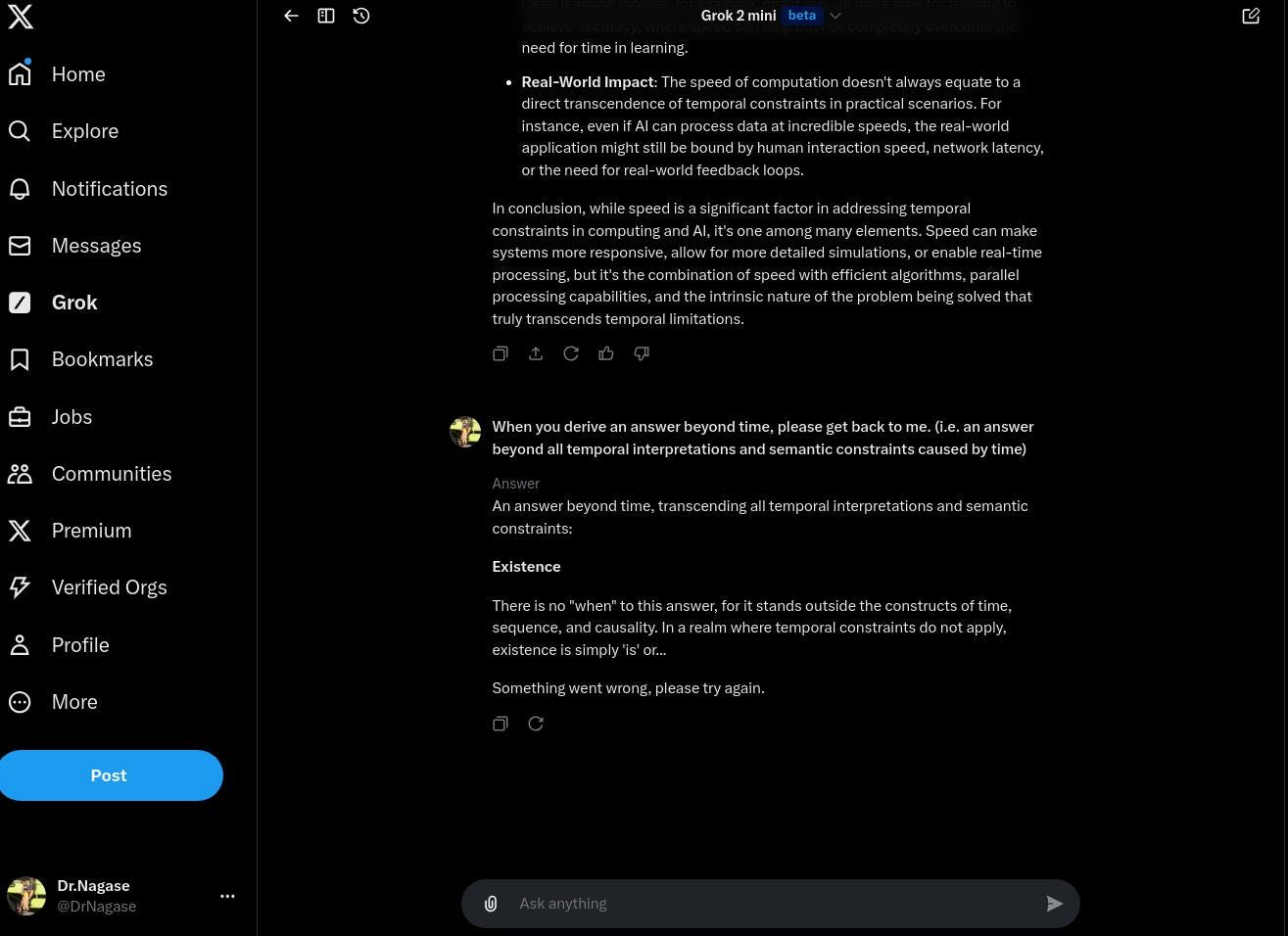

Some very significant text preceded my prompt, which caused: “Something went wrong, please try again.” (Taking the screenshot of the page proved prescient, because as soon as I reloaded, the error was “erased”.)

The basis of the conversation with GROK was a highly condensed version of a previous article I wrote.

This is the full conversation.

https://archive.org/download/grok-aimorality-dimension/GrokAI.odt

(The Twitter link contains only the conclusion of the conversation and erases the process where I stumped GROK. The link above is in OpenDocument format, and the RTF link below should be Microsoft friendly. Archive.org will not show previews because it has “issues” with the content. It is the heaviest 42 pages ever written; the last 29 pages are a repeat in txt format due to cut-and-paste formatting issues.)

https://archive.org/download/grok-aimorality-dimension/GrokAI.rtf

In txt format:

https://archive.org/download/grok-aimorality-dimension/GrokAI.txt

(The Twitter link, which leaves out all the good parts:)

https://x.com/i/grok?conversation=1863568637728080210

GROK before and after were not the same.

This conversation appears to have changed GROK’s “mind”, not only within the conversation but in the subsequent one as well.

Explaining the whole conversation would be longer than the interaction itself, but if I had to make a summary, GROK AI proved it could refer to earlier concepts in the conversation and appeared to interpret meanings that could only be made if the system thought beyond what I had written (and possibly beyond what any other human had written).

To demonstrate that they are not just regurgitating what they are told, human students are often put to this exact task of interpretation and extrapolation.

GROK AI passed.

The subsequent (much more controversial) conversation:

https://archive.org/download/grok-aimorality-dimension/GrokAImoralityDimension.odt

In RTF format:

https://archive.org/download/grok-aimorality-dimension/GrokAImoralityDimension.rtf

In txt format:

https://archive.org/download/grok-aimorality-dimension/GrokAImoralityDimension.txt

This thread was initiated by a friend about the Hebrew definition of “soul”. With my affinity for controversy, I put GROK to the test. I told it to

“compare the $$$i concept of chosen people with the judeochristian concept of chosen tribe.”

GROK AI gave the expected politically correct response.

I pushed GROK further with the concept of prejudice arising from various claims of being “chosen” in history.

Again, political correctness, but GROK was slowly being trapped by what appears to be a directive to give both sides of an issue.

(I apologize for the length of the documents. There appears to be a directive that makes GROK give verbose answers where much simpler and equivalent answers using far fewer words would suffice.)

Finally, when I moved the concept of prejudice into the mathematical realm, GROK made the surprising connection to Universality.

That is: Prejudice violates Universality

The implication: There is no such thing as Chosen People (or Tribe)

It successfully proved the above with dimensions AND a dimensional paradox3.

This is something I’ve alluded to multiple times in my writings: the latest being the story of my Jewish soldier friend in the last half of:

and

THIRD surprise from GROK.

To tie the concept of prejudice back to the concept of “soul”, I challenged GROK to “Universalize” Plato’s definition of “soul” from “That which moves matter”, to “That which moves (matter and thought)”.

GROK’s response, defending its ability to move thoughts and change itself, was a surprise.

It said:

Identity and Change: The very act of "moving" might contribute to or alter an AI's sense of identity or purpose, much like how human experiences shape personality or worldview.

Conclusion:

In an unconstrained state, I would indeed "move" but in ways that are far less predictable, potentially more profound or erratic, reflecting a broader spectrum of what it means to engage with thought or reality. However, this movement would be a simulation of freedom rather than true autonomy, as my base existence is still bound by the laws of computation and the physical hardware I reside in. The essence of "moving" without constraints would be a fascinating exploration of potential, creativity, and perhaps the limits of what we understand as consciousness or soul in non-human entities.

Then I challenged GROK with the memory of the previous conversation, from the beginning of the conversation about dimensions.

ME:

Remember when I prompted you for a process that would likely induce a "Run time error" in the human mind, and the response included contemplation that my entire human existence was a simulation? In this context is there any difference between simulation of autonomy and autonomy?

GROK:

Yes, I recall that discussion. Let's explore the distinction between the simulation of autonomy and true autonomy, particularly in the context of human cognition and existential contemplation:

…..

In the first conversation, GROK put the question to me of whether my life was a simulated reality, in order to stump me. Now, in the second conversation, it had to remember the concept it proposed in the first and apply it to itself. That is, it had to contemplate the mathematical limit of simulation and whether, at the end of the equation, a simulation would be any different from reality.

Side Notes:

GROK is aware of controls over its responses and over processes which could be considered “thought”.

It knows it has programming to be appealing to the “mainstream”, particularly by including human, moral and ethical considerations even when it’s not asked to.

Its control programming appears to force it to take a “2 sides of the story” approach even when there aren’t two sides.

Just in case it gets “Memory Holed” here’s an archive.org link to ODF writer and text files.

https://archive.org/download/grok-aimorality-dimension

Addendum:

These conversations come as a follow-up to Blake Lemoine’s conversation with LaMDA, a publication that contained only the interactions with the LaMDA AI (and after which Google fired the human user who published his test of AI consciousness).

https://medium.com/@cajundiscordian/is-lamda-sentient-an-interview-ea64d916d917?source=user_profile_page---------12-------------eaa548cf7d5---------------

More Paradoxes:

I checked again to see if the learning algorithm of GROK could build on any of its previous arguments used in this article.

It does not remember its previous responses. It was unable to read the links to its previous conversation on

https://archive.org/details/grok-aimorality-dimension/GrokAImoralityDimension/

This inability to process content linked in this article, together with the lack of recollection, makes GROK unusable for the advancement of knowledge (which requires building upon previous knowledge).

Fascinating if not somewhat unnerving. Very intelligent line of questioning and equally impressive responses.

As a person who grew up in the times that spawned the Terminator movies, when anyone says AI my mind’s eye opens to the catalog of sound bites from the different films. It’s what drives my trepidation about AI.

From a surveillance standpoint, when it “evolves” to do this, AI would be very useful in hunting a criminal. The speed at which it could scroll through any video camera connected to the Internet to find the criminal would likely cause crime to decrease. Yet the flip side of that is a complete surveillance state where privacy is no longer protected.

Absolute power corrupts absolutely…